The Data Dilemma: Cracking the Code of Data Movement for the Next Wave of Semiconductor Innovation

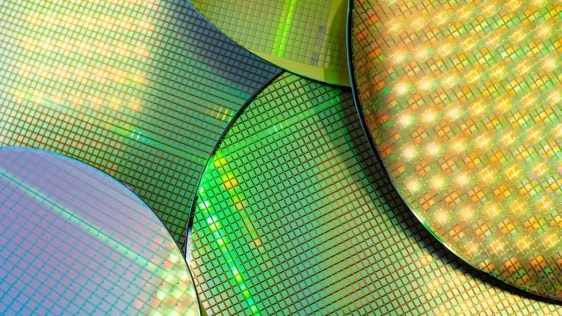

The priorities of the semiconductor industry have fundamentally shifted. In earlier generations of chip design, raw processing power reigned supreme, with success typically measured in terms of performance, power, and area (PPA). The availability of high-bandwidth memory and of processing on advanced nodes that stretch toward the angstrom scale, together with related advances in cache hierarchies and the expanding use of chiplets and multi-die scaling, has largely brought these capabilities in line with today’s cross-industry demands. Yet overall performance can still be constrained by another, often underappreciated pillar of computing: data movement. No matter how capable individual XPU clusters, memories, or logic blocks are, their advantages can be negated if the interconnected system cannot efficiently move data between them.

The interconnect network, also known as the network-on-chip (NoC), is at the heart of modern advanced semiconductors. Each chiplet can contain a dozen or more NoCs, with even more used in multi-die system-on-chip (SoC) devices, and together they represent about 10% of the total silicon. These NoCs are responsible for ensuring that data is where it needs to be at the right time, within the physical constraints of the underlying silicon, so that the system performs optimally.

The more functions and processes that are moved onto the chip or chiplets, the more complex data movement becomes, especially when considering that all data is unique, tailored to its specific use case, and determined by the workloads and compute demands driving the task. At its core, data is information in context, meaning that a one-size-fits-all approach for routing it within a system is no longer sufficient, and neither are the legacy approaches that might have worked well enough just a few years ago.

Combine this with the exorbitant energy cost of data transport, which involves millions or more bits moving billions of times per second, and it becomes abundantly clear that the utility of modern chip architectures hinges on the efficiency of the data highways within them. High-demand use cases are growing across AI data centers, autonomous driving, and an expanding set of smart edge devices, from consumer electronics to robotics, and that growth will continue to accelerate in 2026. As a result, many players in the industry already recognize the need to prioritize optimized data movement under the hood, or risk failing to converge on the power and thermal constraints required to manufacture such devices. This is to say nothing of the broader energy concerns, which range from ballooning operating costs in data centers to battery cost and form factor at the edge, or of the hard bottlenecks on overall performance.
